Understanding the “Canary” in Canary Deployment

Before diving into how canary deployment helps us understand the actual end-user experience, let’s begin with the significance of its name. If your mind is drawing a comparison with canary birds, you’re on the right track! In the history of British coal mining, these humble birds served as toxic gas detectors. Because canaries are far more sensitive to airborne toxins than humans, a canary in distress warned miners that gases such as carbon monoxide were present before the miners themselves would notice. Similarly, DevOps engineers run a canary deployment analysis in their CI/CD pipeline to catch possible errors in a new release early. Here, however, the figurative canaries are a small set of users, who will be the first to experience any glitches in the update.

Let’s define Canary Deployment!

Canary deployment is a technique for rolling out a software update in a controlled way to a small batch of users before making it available to everyone, thereby reducing the chance of a faulty experience at scale. Once the update has been examined and feedback collected, that feedback is applied and the update is released to a progressively larger audience.

Steps Involved in Canary Deployment Strategy

To keep our customers happy and engaged, it’s important to roll out new updates regularly. Since every change we introduce might carry an error or two, we need a canary release analysis before releasing it to all our customers.

Given the level of competition in the market, any bug left unattended is likely to cause customer displeasure and can seriously damage the company’s reputation. Let’s start by understanding the basic structure of the canary deployment strategy, which we can break down into the following phases:

- Creation

- Analysis

- Roll-out/Roll-back

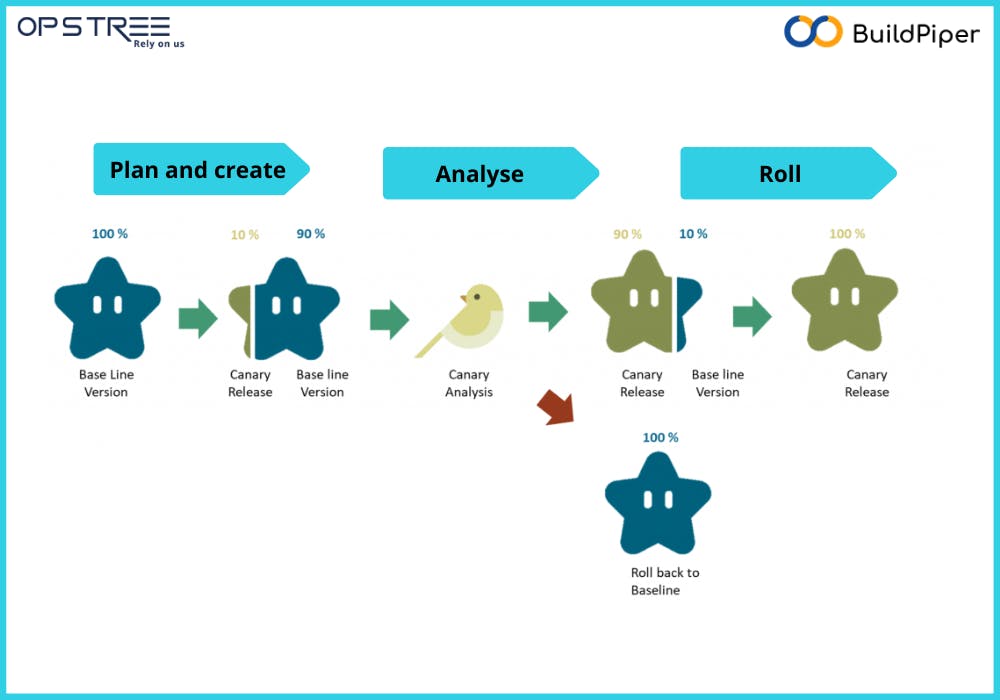

Creation

To begin with, we need to create a canary infrastructure where our newest update is deployed. Next, we direct a small amount of traffic to this newly created canary instance, while the rest of the traffic continues to be served by the older (baseline) version of our software.
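
As a minimal sketch of that traffic split, assuming a simple in-process router rather than a real load balancer or service mesh, each incoming request can be sent to the canary with a fixed probability. The version labels and the 5% weight below are hypothetical.

```python
import random
from collections import Counter

# Hypothetical labels for the two deployed versions.
BASELINE = "baseline-v1"
CANARY = "canary-v2"


def pick_version(canary_weight: float, rng: random.Random) -> str:
    """Route a single request: canary with probability canary_weight, else baseline."""
    if not 0.0 <= canary_weight <= 1.0:
        raise ValueError("canary_weight must be between 0.0 and 1.0")
    # rng.random() is uniform on [0.0, 1.0), so weight 0.0 never picks the canary
    # and weight 1.0 always does.
    return CANARY if rng.random() < canary_weight else BASELINE


rng = random.Random(42)  # fixed seed keeps the simulation reproducible
counts = Counter(pick_version(0.05, rng) for _ in range(10_000))
print(counts)  # roughly 5% canary, 95% baseline
```

A per-request split like this is the simplest option; it is often preferable to pin each user to one version so the same person doesn't bounce between old and new behavior.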

Analysis

Now it’s showtime for our DevOps team! They need to continuously monitor the performance data received from network traffic monitors, synthetic transaction monitors, and every other available source linked to the canary instance. Once enough data has been collected, the team compares it with the data from the baseline version.
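
To make the comparison concrete, here is a sketch that checks two hypothetical metrics, error rate and 95th-percentile latency, for the canary against the baseline. The `Metrics` type, the choice of metrics, and the tolerances (0.5 percentage points of extra errors, 10% extra latency) are illustrative assumptions, not standard values.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    """Hypothetical summary of one version's monitoring data."""
    error_rate: float      # fraction of failed requests, e.g. 0.01 means 1%
    p95_latency_ms: float  # 95th-percentile response time in milliseconds


def compare(
    baseline: Metrics,
    canary: Metrics,
    max_error_increase: float = 0.005,
    max_latency_ratio: float = 1.10,
) -> dict[str, bool]:
    """Return a pass/fail verdict per metric for canary versus baseline."""
    return {
        # Canary may not exceed the baseline error rate by more than 0.5 percentage points.
        "error_rate": canary.error_rate - baseline.error_rate <= max_error_increase,
        # Canary p95 latency may be at most 10% higher than the baseline's.
        "p95_latency": canary.p95_latency_ms <= baseline.p95_latency_ms * max_latency_ratio,
    }


checks = compare(Metrics(0.010, 200.0), Metrics(0.012, 250.0))
print(checks)  # {'error_rate': True, 'p95_latency': False}
```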

Roll-out/Roll-back

After the analysis is done, it’s time to weigh the results of the comparison and decide whether rolling out the new feature is a good decision, or whether it’s better to return to the baseline state and roll back the update.
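
The decision itself can be sketched as a threshold on the share of metric checks that passed. This assumes per-metric pass/fail results are already available from the comparison step; the `decide` function and its default of requiring every check to pass are hypothetical choices.

```python
def decide(checks: dict[str, bool], pass_threshold: float = 1.0) -> str:
    """Turn per-metric pass/fail results into a roll-out or roll-back decision.

    The score is the fraction of metrics that passed; with the default threshold
    of 1.0, every metric must pass for the update to be rolled out.
    """
    if not checks:
        raise ValueError("no metric results to judge")
    score = sum(checks.values()) / len(checks)  # True counts as 1, False as 0
    return "roll-out" if score >= pass_threshold else "roll-back"


print(decide({"error_rate": True, "p95_latency": True}))   # roll-out
print(decide({"error_rate": True, "p95_latency": False}))  # roll-back
print(decide({"error_rate": True, "p95_latency": False}, pass_threshold=0.5))  # roll-out
```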

So, how do we benefit?

Zero Production Downtime

Because only a small share of traffic reaches the canary, if the canary instance isn’t performing as expected you can simply reroute that traffic to your baseline version. While the engineers run their tests at this stage, they can pinpoint the source of the error and either fix it or roll back the entire update and prepare a new one.

Cost-Efficient - Friendly with smaller Infrastructure

The goal of canary release analysis is to route a tiny share of your customers to the newly created canary instance where the new update is deployed. This means you use only a little extra infrastructure to support the entire process. By contrast, the blue-green deployment strategy requires an entire second hosting environment for deploying the new application. Compared with blue-green deployment, we don’t have to spend as much effort operating and maintaining a duplicate environment, and in canary deployment it’s easier to enable or disable a particular feature based on criteria of our choosing.
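
A rough back-of-the-envelope comparison, assuming capacity scales linearly with traffic and the baseline fleet isn’t shrunk while the canary runs: a canary only needs instances for its slice of traffic, while blue-green duplicates the whole fleet. The fleet size and the 3% canary share below are hypothetical numbers.

```python
import math


def extra_instances_canary(fleet_size: int, canary_fraction: float) -> int:
    """Extra instances for a canary sized to its traffic share (at least one)."""
    return max(1, math.ceil(fleet_size * canary_fraction))


def extra_instances_blue_green(fleet_size: int) -> int:
    """Extra instances for blue-green: a complete second environment."""
    return fleet_size


fleet = 40  # hypothetical number of instances serving production traffic
print(extra_instances_canary(fleet, 0.03))  # 2 (3% of 40 is 1.2, rounded up)
print(extra_instances_blue_green(fleet))    # 40
```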

Room for Constant Innovation

The flexibility to test new features with a small subset of users and receive end-user feedback immediately is what motivates the dev team to keep bringing in improvements and updates. We can gradually increase the canary instance’s share of traffic up to 100% while keeping track of the production stability of the features being rolled out.
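
That gradual increase can be sketched as a loop over traffic stages that stops at the first failed health check. The stage percentages are a hypothetical schedule, and `is_healthy` stands in for whatever analysis your pipeline actually runs at each stage.

```python
from collections.abc import Callable, Sequence

# Hypothetical ramp schedule: fraction of traffic sent to the canary at each stage.
DEFAULT_STAGES = (0.01, 0.05, 0.25, 0.50, 1.00)


def progressive_rollout(
    is_healthy: Callable[[float], bool],
    stages: Sequence[float] = DEFAULT_STAGES,
) -> tuple[str, float]:
    """Raise canary traffic stage by stage, stopping at the first unhealthy stage.

    Returns the outcome and the last traffic weight that was tried.
    """
    if not stages:
        raise ValueError("at least one stage is required")
    for weight in stages:
        # In a real pipeline this is where the router weight would be updated and
        # metrics collected for a soak period before calling the health check.
        if not is_healthy(weight):
            return "rolled-back", weight
    return "rolled-out", stages[-1]


print(progressive_rollout(lambda w: True))      # ('rolled-out', 1.0)
print(progressive_rollout(lambda w: w < 0.25))  # ('rolled-back', 0.25)
```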

Do we have any Limitations?

Well, everything has limitations. What’s important for us is to understand how to counteract them.

Time-consuming and Prone to Errors

Many enterprises executing canary deployment strategies perform the deployments in a siloed fashion, with a DevOps engineer assigned to collect and analyze the data manually. This is time-consuming and doesn’t scale, so it hinders rapid deployments in CI/CD processes. Manual analysis can also go wrong: we might roll back a good update or roll forward a bad one.
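
One way to reduce both the manual effort and the risk of a wrong call is to automate the comparison with a statistical test, so that random noise in a small canary sample isn’t mistaken for a regression. The sketch below swaps in a standard one-sided two-proportion z-test on error counts; the function names and the 1% significance level are assumptions, and a real canary analysis would usually look at several metrics.

```python
import math


def error_rate_z(base_errors: int, base_total: int, canary_errors: int, canary_total: int) -> float:
    """Two-proportion z statistic for canary error rate minus baseline error rate."""
    if base_total <= 0 or canary_total <= 0:
        raise ValueError("request totals must be positive")
    p_base = base_errors / base_total
    p_canary = canary_errors / canary_total
    pooled = (base_errors + canary_errors) / (base_total + canary_total)
    se = math.sqrt(pooled * (1 - pooled) * (1 / base_total + 1 / canary_total))
    if se == 0.0:  # no errors at all (or nothing but errors): nothing to distinguish
        return 0.0
    return (p_canary - p_base) / se


def canary_is_worse(
    base_errors: int, base_total: int, canary_errors: int, canary_total: int,
    z_critical: float = 2.326,
) -> bool:
    """One-sided test at roughly the 1% significance level (z > 2.326)."""
    return error_rate_z(base_errors, base_total, canary_errors, canary_total) > z_critical


print(canary_is_worse(100, 10_000, 30, 1_000))  # True: 3% vs 1% is unlikely to be noise
print(canary_is_worse(100, 10_000, 12, 1_000))  # False: 1.2% vs 1% is within noise
```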

On-Premise Applications are difficult to update

Canary deployment is an appropriate and practical approach for applications hosted in the cloud. It’s harder when the applications are installed on end users’ own devices. Even then, there’s a way around it: setting up an auto-update mechanism for end users.
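
For installed applications, a staged auto-update can reuse the canary idea on the client side: each device hashes its ID into a stable bucket and updates only if that bucket falls under the current rollout percentage. The ID format, release label, and percentages below are hypothetical; SHA-256 is used only because it spreads IDs evenly, not for security.

```python
import hashlib


def rollout_bucket(device_id: str, release: str) -> int:
    """Map a device to a stable bucket in 0..9999 for the given release."""
    # Salting with the release name gives each release a different early cohort.
    digest = hashlib.sha256(f"{release}:{device_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 10_000


def should_update(device_id: str, release: str, rollout_percent: float) -> bool:
    """True if this device falls inside the current rollout percentage."""
    return rollout_bucket(device_id, release) < rollout_percent * 100


eligible = sum(should_update(f"device-{i}", "v2.0", 10) for i in range(10_000))
print(eligible)  # close to 1,000 (about 10% of devices)
```

Because the bucket is stable, raising the percentage from 10 to 50 keeps the first 10% of devices in the cohort and adds more, instead of reshuffling who gets the update.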

Implementations might require some skill!

Right now we’re focusing on the flexibility canary deployment offers for testing different versions of our application, but we should also pay attention to managing the databases associated with all these instances. To perform a proper canary deployment and compare the old version with the new one, the database schema must support more than one version of the application, allowing the old and new versions to run simultaneously.
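
A common way to let two versions share one database is to expand the schema in a backward-compatible way first, for example by adding only nullable columns. The sketch below uses Python’s built-in `sqlite3` module with a hypothetical `users` table and `display_name` column to show the old and new versions reading and writing side by side.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # throwaway database for the sketch
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")

# Expand: add a nullable column, so the baseline version's existing INSERTs still work.
conn.execute("ALTER TABLE users ADD COLUMN display_name TEXT")

# Baseline (old) version: knows nothing about display_name.
conn.execute("INSERT INTO users (name) VALUES (?)", ("alice",))

# Canary (new) version: writes the new column too.
conn.execute("INSERT INTO users (name, display_name) VALUES (?, ?)", ("bob", "Bob B."))

# The old version's query keeps working unchanged.
old_rows = conn.execute("SELECT name FROM users ORDER BY id").fetchall()
# The new version falls back to name for rows the old version wrote.
new_rows = conn.execute(
    "SELECT name, COALESCE(display_name, name) FROM users ORDER BY id"
).fetchall()

print(old_rows)  # [('alice',), ('bob',)]
print(new_rows)  # [('alice', 'alice'), ('bob', 'Bob B.')]
```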

Wrapping up…

With enterprises increasingly interested in canary deployment analysis, it’s worth noting that we need to counteract its limitations and make the process smoother. Good continuous delivery solutions or managed Kubernetes services can automate parts of the process to keep errors at bay, and security should be integrated at every stage of development.